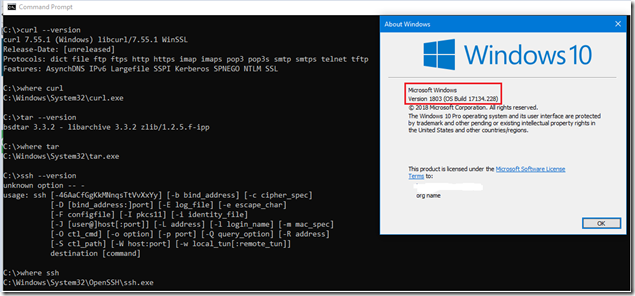

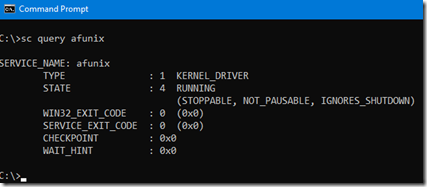

.NET HttpClient is the main API for sending HTTP requests and receiving HTTP responses from a resource identified by a URI. By default it connects to servers over TCP. In .NET 5, the library was extended to support other transports such as Unix domain sockets and named pipes, because many HTTP server implementations on Unix listen on Unix domain sockets. Windows 10 also added Unix domain socket support (for more details see here).

The ASP.NET Core web server (Kestrel) can also listen on Unix domain sockets, and from .NET 8 onwards on named pipes as well.

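Alternative transports are plugged into HttpClient through SocketsHttpHandler.ConnectCallback. Instead of opening a TCP connection itself, the handler calls this callback and sends the HTTP request over whatever Stream the callback returns. This makes it possible to connect to a server over a Unix domain socket or a named pipe.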
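To make the mechanism concrete, here is a minimal, self-contained sketch. A loopback TCP listener stands in for a non-TCP server so that it runs on any OS, and the callback connects to it no matter which host the URL names. The host example.invalid and the small echo server are assumptions for illustration only. The point is that the URL's host is never resolved: it only shows up in context.DnsEndPoint and in the Host header the server receives.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Net;
using System.Net.Http;
using System.Net.Sockets;
using System.Text;
using System.Threading.Tasks;

// Loopback TCP listener standing in for a server reachable only through a custom transport.
var listener = new TcpListener(IPAddress.Loopback, 0);
listener.Start();
var serverEndPoint = (IPEndPoint)listener.LocalEndpoint;

// Minimal one-shot HTTP/1.1 server: replies with the Host header value it received.
Task serverTask = Task.Run(async () =>
{
    using TcpClient connection = await listener.AcceptTcpClientAsync();
    using NetworkStream stream = connection.GetStream();
    using var reader = new StreamReader(stream, Encoding.ASCII, leaveOpen: true);
    string host = "";
    string? line;
    while (!string.IsNullOrEmpty(line = await reader.ReadLineAsync()))
    {
        if (line.StartsWith("Host:", StringComparison.OrdinalIgnoreCase))
            host = line.Substring("Host:".Length).Trim();
    }
    byte[] body = Encoding.ASCII.GetBytes(host);
    string head = $"HTTP/1.1 200 OK\r\nContent-Length: {body.Length}\r\nConnection: close\r\n\r\n";
    await stream.WriteAsync(Encoding.ASCII.GetBytes(head));
    await stream.WriteAsync(body);
});

var requestedHosts = new List<string>();
var handler = new SocketsHttpHandler
{
    UseProxy = false, // keep HTTP_PROXY-style environment settings out of the demo
    ConnectCallback = async (context, cancellationToken) =>
    {
        // DnsEndPoint is derived from the request URL; it is recorded here but never resolved
        requestedHosts.Add(context.DnsEndPoint.Host);
        var socket = new Socket(AddressFamily.InterNetwork, SocketType.Stream, ProtocolType.Tcp);
        try
        {
            await socket.ConnectAsync(serverEndPoint, cancellationToken);
            // ownsSocket: true so that disposing the stream also closes the socket
            return new NetworkStream(socket, ownsSocket: true);
        }
        catch
        {
            socket.Dispose();
            throw;
        }
    }
};

using var httpClient = new HttpClient(handler);
string echoedHost = await httpClient.GetStringAsync("http://example.invalid/anything");
await serverTask;
listener.Stop();
Console.WriteLine($"Callback saw '{requestedHosts[0]}', server saw Host '{echoedHost}'");
```

Swapping the TCP Socket in the callback for a Unix domain socket or a NamedPipeClientStream is the only change needed to move to another transport.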

HttpClient with Unix Domain Socket

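```csharp
using System.Net.Http;
using System.Net.Sockets;

SocketsHttpHandler socketsHttpHandler = new SocketsHttpHandler();

// Custom connection callback that connects to the Unix domain socket instead of opening a TCP connection
socketsHttpHandler.ConnectCallback = async (context, cancellationToken) =>
{
    var socket = new Socket(AddressFamily.Unix, SocketType.Stream, ProtocolType.Unspecified);
    try
    {
        // Docker daemon's default socket on Linux
        var endpoint = new UnixDomainSocketEndPoint("/var/run/docker.sock");
        await socket.ConnectAsync(endpoint, cancellationToken);
        // ownsSocket: true so that disposing the stream also closes the socket
        return new NetworkStream(socket, ownsSocket: true);
    }
    catch
    {
        socket.Dispose();
        throw;
    }
};

// create HttpClient with SocketsHttpHandler
var httpClient = new HttpClient(socketsHttpHandler);

// Make an HTTP request; the host name in the URL is only used for the Host header
string json = await httpClient.GetStringAsync("http://localhost/v1.41/containers/json");
```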
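To try the Unix domain socket callback without a Docker daemon, the following sketch runs a tiny one-shot HTTP server on a Unix domain socket in the temp directory and points the same kind of ConnectCallback at it. The random socket path and the echo server are hypothetical stand-ins for /var/run/docker.sock and the Docker daemon; on Windows this needs Windows 10 or later.

```csharp
using System;
using System.IO;
using System.Net.Http;
using System.Net.Sockets;
using System.Text;
using System.Threading.Tasks;

// Hypothetical socket path for this demo (a real client would use e.g. /var/run/docker.sock)
string socketPath = Path.Combine(Path.GetTempPath(), Path.GetRandomFileName());
var endPoint = new UnixDomainSocketEndPoint(socketPath);

// Minimal one-shot HTTP/1.1 server on the Unix domain socket: it echoes the request line back
var listenSocket = new Socket(AddressFamily.Unix, SocketType.Stream, ProtocolType.Unspecified);
listenSocket.Bind(endPoint);
listenSocket.Listen(1);
Task serverTask = Task.Run(async () =>
{
    using Socket connection = await listenSocket.AcceptAsync();
    using var stream = new NetworkStream(connection, ownsSocket: false);
    using var reader = new StreamReader(stream, Encoding.ASCII, leaveOpen: true);
    string requestLine = await reader.ReadLineAsync() ?? "";
    while (!string.IsNullOrEmpty(await reader.ReadLineAsync())) { } // skip the remaining headers
    byte[] body = Encoding.ASCII.GetBytes(requestLine);
    string head = $"HTTP/1.1 200 OK\r\nContent-Length: {body.Length}\r\nConnection: close\r\n\r\n";
    await stream.WriteAsync(Encoding.ASCII.GetBytes(head));
    await stream.WriteAsync(body);
});

// Client side: a ConnectCallback pointed at the demo socket
var handler = new SocketsHttpHandler
{
    UseProxy = false, // keep HTTP_PROXY-style environment settings out of the demo
    ConnectCallback = async (context, cancellationToken) =>
    {
        var socket = new Socket(AddressFamily.Unix, SocketType.Stream, ProtocolType.Unspecified);
        try
        {
            await socket.ConnectAsync(endPoint, cancellationToken);
            return new NetworkStream(socket, ownsSocket: true);
        }
        catch
        {
            socket.Dispose();
            throw;
        }
    }
};

using var httpClient = new HttpClient(handler);
string response = await httpClient.GetStringAsync("http://localhost/hello");
await serverTask;

// A bound Unix domain socket leaves a file system entry behind, so clean it up
listenSocket.Dispose();
File.Delete(socketPath);
Console.WriteLine(response);
```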

HttpClient with Named Pipe

```csharp
using System.IO.Pipes;
using System.Net.Http;

SocketsHttpHandler socketsHttpHandler = new SocketsHttpHandler();

// Custom connection callback that connects to the named pipe server
socketsHttpHandler.ConnectCallback = async (context, cancellationToken) =>
{
    // Docker's default engine pipe on Windows is \\.\pipe\docker_engine ("." = local machine)
    var pipeClientStream = new NamedPipeClientStream(".", "docker_engine",
        PipeDirection.InOut, PipeOptions.Asynchronous);
    try
    {
        await pipeClientStream.ConnectAsync(cancellationToken);
        return pipeClientStream;
    }
    catch
    {
        pipeClientStream.Dispose();
        throw;
    }
};

// create HttpClient with SocketsHttpHandler
var httpClient = new HttpClient(socketsHttpHandler);

// Make an HTTP request; the host name in the URL is only used for the Host header
string json = await httpClient.GetStringAsync("http://localhost/v1.41/containers/json");
```
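To try the named pipe callback locally, this sketch hosts a throwaway HTTP server on a NamedPipeServerStream and connects HttpClient to it. The pipe name and the echo server are made up for the demo. On Linux and macOS, .NET implements named pipes on top of Unix domain sockets, so the sketch is not limited to Windows.

```csharp
using System;
using System.IO;
using System.IO.Pipes;
using System.Net.Http;
using System.Text;
using System.Threading.Tasks;

// Hypothetical pipe name for this demo (Docker's engine pipe is "docker_engine")
string pipeName = $"demo_{Environment.ProcessId}";

// Minimal one-shot HTTP/1.1 server on the named pipe: it echoes the request line back
var pipeServer = new NamedPipeServerStream(pipeName, PipeDirection.InOut, 1,
    PipeTransmissionMode.Byte, PipeOptions.Asynchronous);
Task serverTask = Task.Run(async () =>
{
    await pipeServer.WaitForConnectionAsync();
    using var reader = new StreamReader(pipeServer, Encoding.ASCII, leaveOpen: true);
    string requestLine = await reader.ReadLineAsync() ?? "";
    while (!string.IsNullOrEmpty(await reader.ReadLineAsync())) { } // skip the remaining headers
    byte[] body = Encoding.ASCII.GetBytes(requestLine);
    string head = $"HTTP/1.1 200 OK\r\nContent-Length: {body.Length}\r\nConnection: close\r\n\r\n";
    await pipeServer.WriteAsync(Encoding.ASCII.GetBytes(head));
    await pipeServer.WriteAsync(body);
    await pipeServer.FlushAsync();
});

var handler = new SocketsHttpHandler
{
    UseProxy = false, // keep HTTP_PROXY-style environment settings out of the demo
    ConnectCallback = async (context, cancellationToken) =>
    {
        // "." means the local machine
        var pipe = new NamedPipeClientStream(".", pipeName, PipeDirection.InOut, PipeOptions.Asynchronous);
        try
        {
            await pipe.ConnectAsync(cancellationToken);
            return pipe;
        }
        catch
        {
            pipe.Dispose();
            throw;
        }
    }
};

using var httpClient = new HttpClient(handler);
string response = await httpClient.GetStringAsync("http://localhost/hello");
await serverTask;

// Close the server end only after the client has read the whole response
pipeServer.Dispose();
Console.WriteLine(response);
```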

The following complete sample uses HttpClient to call the Docker daemon's REST API, connecting over a named pipe on Windows and a Unix domain socket on Linux.

```csharp
using System;
using System.Collections.Generic;
using System.IO.Pipes;
using System.Net.Http;
using System.Net.Http.Json;
using System.Net.Sockets;

HttpClient client = CreateHttpClientConnectionToDockerEngine();

// The host name only ends up in the Host header; the ConnectCallback decides the transport
string dockerUrl = "http://localhost/v1.41/containers/json";

var containers = await client.GetFromJsonAsync<List<Container>>(dockerUrl) ?? new List<Container>();

Console.WriteLine("Container List:...");

foreach (var item in containers)
{
    Console.WriteLine($"{string.Join(", ", item.Names)}  {item.Image}  {item.State}  {item.Status}");
}

// Create HttpClient to Docker Engine using a named pipe (Windows) or a Unix domain socket (other OSes)
HttpClient CreateHttpClientConnectionToDockerEngine()
{
    SocketsHttpHandler socketsHttpHandler = OperatingSystem.IsWindows()
        ? GetSocketHandlerForNamedPipe()
        : GetSocketHandlerForUnixSocket();

    return new HttpClient(socketsHttpHandler);

    // Local function to create a handler that uses a named pipe
    static SocketsHttpHandler GetSocketHandlerForNamedPipe()
    {
        Console.WriteLine("Connecting to Docker Engine using Named Pipe:");

        var socketsHttpHandler = new SocketsHttpHandler();

        // Custom connection callback that connects to \\.\pipe\docker_engine
        socketsHttpHandler.ConnectCallback = async (context, cancellationToken) =>
        {
            var pipeClientStream = new NamedPipeClientStream(".", "docker_engine",
                PipeDirection.InOut, PipeOptions.Asynchronous);
            try
            {
                await pipeClientStream.ConnectAsync(cancellationToken);
                return pipeClientStream;
            }
            catch
            {
                pipeClientStream.Dispose();
                throw;
            }
        };

        return socketsHttpHandler;
    }

    // Local function to create a handler that uses a Unix domain socket
    static SocketsHttpHandler GetSocketHandlerForUnixSocket()
    {
        Console.WriteLine("Connecting to Docker Engine using Unix Domain Socket:");

        var socketsHttpHandler = new SocketsHttpHandler();

        // Custom connection callback that connects to /var/run/docker.sock
        socketsHttpHandler.ConnectCallback = async (context, cancellationToken) =>
        {
            var socket = new Socket(AddressFamily.Unix, SocketType.Stream, ProtocolType.Unspecified);
            try
            {
                await socket.ConnectAsync(new UnixDomainSocketEndPoint("/var/run/docker.sock"), cancellationToken);
                // ownsSocket: true so that disposing the stream also closes the socket
                return new NetworkStream(socket, ownsSocket: true);
            }
            catch
            {
                socket.Dispose();
                throw;
            }
        };

        return socketsHttpHandler;
    }
}

/// <summary>
/// Record class to hold the container information returned by GET /containers/json
/// </summary>
/// <param name="Names">Container names, each prefixed with '/'</param>
/// <param name="Image">Image the container was created from</param>
/// <param name="ImageID">ID of that image</param>
/// <param name="Command">Command the container runs</param>
/// <param name="State">Container state, e.g. running or exited</param>
/// <param name="Status">Human-readable status text</param>
/// <param name="Created">Creation time in seconds since the Unix epoch (int64 in the Docker API)</param>
public record Container(List<string> Names, string Image, string ImageID,
    string Command, string State, string Status,
    long Created);
```
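Docker returns Created as a 64-bit Unix timestamp in seconds rather than a date string. A small sketch of turning it into a readable date with DateTimeOffset.FromUnixTimeSeconds, parsing a hand-written sample payload with JsonDocument. The JSON values are illustrative only, not real daemon output.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Hand-written sample shaped like one element of the /containers/json response (illustrative values)
string sampleJson = "[{\"Names\":[\"/web\"],\"Image\":\"nginx:latest\",\"State\":\"running\"," +
                    "\"Status\":\"Up 5 minutes\",\"Created\":1700000000}]";

var createdTimes = new List<DateTimeOffset>();
using JsonDocument doc = JsonDocument.Parse(sampleJson);
foreach (JsonElement container in doc.RootElement.EnumerateArray())
{
    // Docker reports container names with a leading '/'
    string name = container.GetProperty("Names")[0].GetString()!.TrimStart('/');
    // Created is seconds since the Unix epoch, read as a 64-bit integer
    DateTimeOffset created = DateTimeOffset.FromUnixTimeSeconds(container.GetProperty("Created").GetInt64());
    createdTimes.Add(created);
    Console.WriteLine($"{name}: created {created:u}");
}
```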

Conclusion:

The extensibility of .NET HttpClient (through SocketsHttpHandler.ConnectCallback) makes it possible to connect to HTTP servers that use non-TCP transports such as Unix domain sockets and named pipes.

Reference: